AI Mode visual search transforms how users find information through images and visual content. Search engines now analyze visual elements alongside text to deliver more accurate results.

We at Emplibot see businesses struggling to optimize for AI mode visual search effectively. Most content creators miss the technical requirements that make visual content discoverable.

This guide provides actionable strategies to boost your visual content’s search performance and drive measurable traffic growth.

How AI Mode Visual Search Works

Visual Recognition Technology

AI mode visual search processes images through multimodal AI that combines visual recognition with semantic understanding. Google's Shopping Graph analyzes more than 50 billion product listings and refreshes them regularly to keep results accurate. When a user uploads an image, the system runs several queries at once, a technique Google calls visual search fan-out, to recognize both the primary subject and the secondary details within milliseconds.
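To make the fan-out idea concrete, here is a toy Python sketch. It is not Google's implementation. `toy_search` and `TOY_INDEX` are hypothetical stand-ins for a real search backend, and the detected subject and details are assumed to come from an upstream image recognizer. The sketch issues one query for the primary subject and one refinement per detail, all in parallel. It then merges the results in query order and drops duplicates.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy index standing in for a real product search backend.
TOY_INDEX = {
    "barrel jeans": ["jeans-A", "jeans-B"],
    "barrel jeans light wash": ["jeans-B", "jeans-C"],
    "barrel jeans cropped": ["jeans-D"],
}


def toy_search(query):
    """Hypothetical search call: look the query up in the toy index."""
    return TOY_INDEX.get(query, [])


def fan_out(primary, details, search):
    """Query the primary subject plus one refinement per detail, in parallel.

    Results are merged in query order (primary first), keeping the first
    occurrence of each item so duplicates across queries are dropped.
    """
    queries = [primary] + [f"{primary} {detail}" for detail in details]
    with ThreadPoolExecutor(max_workers=len(queries)) as pool:
        # pool.map runs the searches concurrently but returns results in input order.
        result_lists = list(pool.map(search, queries))

    merged, seen = [], set()
    for results in result_lists:
        for item in results:
            if item not in seen:
                seen.add(item)
                merged.append(item)
    return merged
```

For example, `fan_out("barrel jeans", ["light wash", "cropped"], toy_search)` returns `["jeans-A", "jeans-B", "jeans-C", "jeans-D"]`. The item that two sub-queries share appears only once.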

User Behavior Shifts

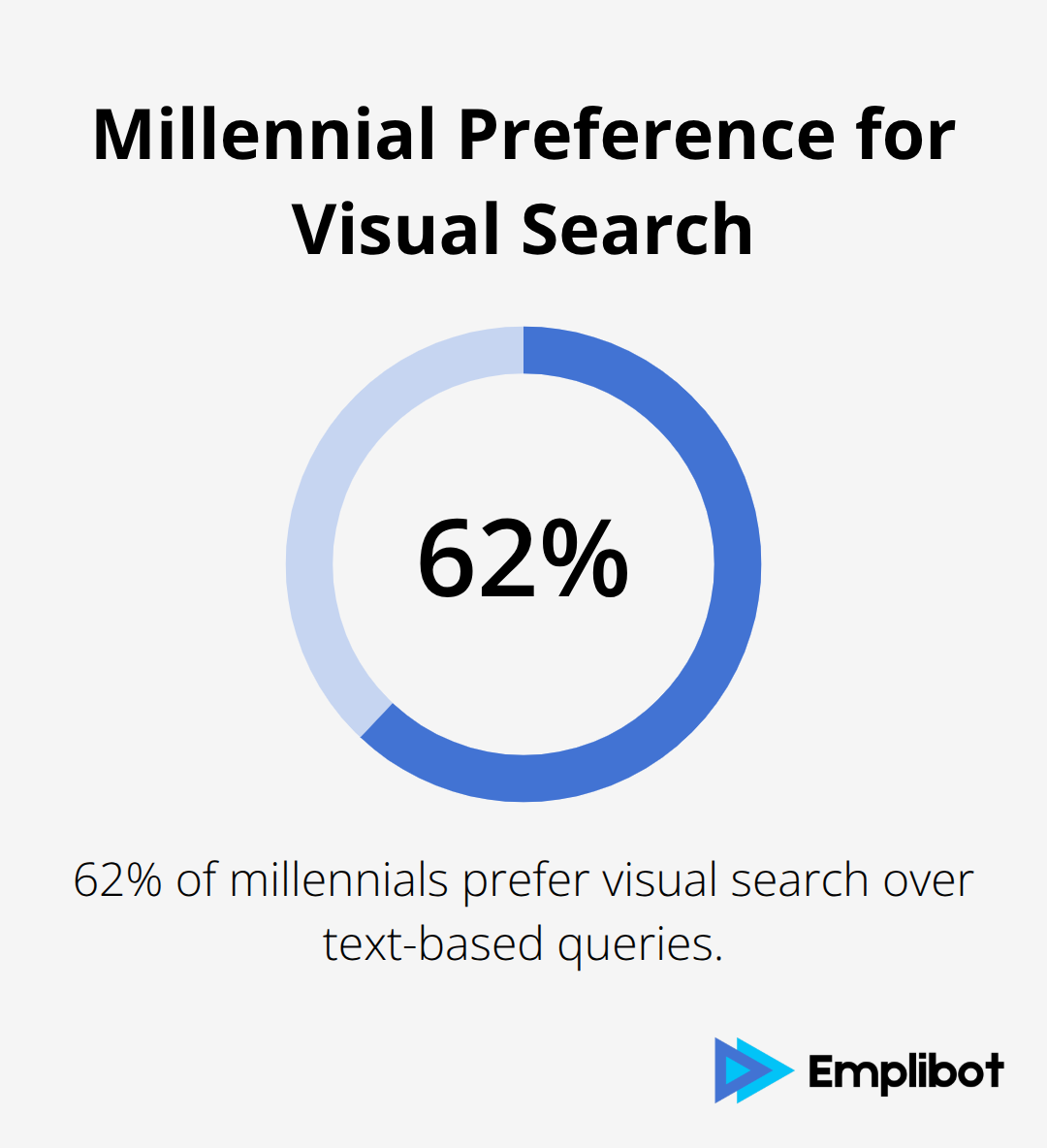

Pinterest data shows 62% of millennials prefer visual search over text-based queries, while Google reports image search usage has doubled since 2019. Users now expect search experiences that blend visual input with natural language refinement. The technology reduces dead-end searches and provides contextually relevant results even when users cannot articulate product names or specifications.

Key Differences from Traditional Search

Traditional image search relied on filename matching and surrounding text context. AI mode visual search reads actual image content, understands spatial relationships between objects, and interprets user intent through conversational queries (like “barrel jeans that aren’t too baggy”). The system analyzes both what users see and what they mean, creating a more intuitive search experience.

Technical Processing Methods

Google's AI Mode builds on a custom version of Gemini and pairs its multimodal capabilities with Google's visual understanding technology to analyze images in depth. Running several queries at once lets it recognize both obvious elements and the subtle details that traditional search methods miss.

This shift requires content creators to optimize for both visual recognition algorithms and conversational search patterns. Your images need strategic formatting, descriptive alt text with key entities, and step-by-step visual documentation that AI systems can parse effectively.

What Makes Images AI-Ready

WebP Format Powers Visual Search Performance

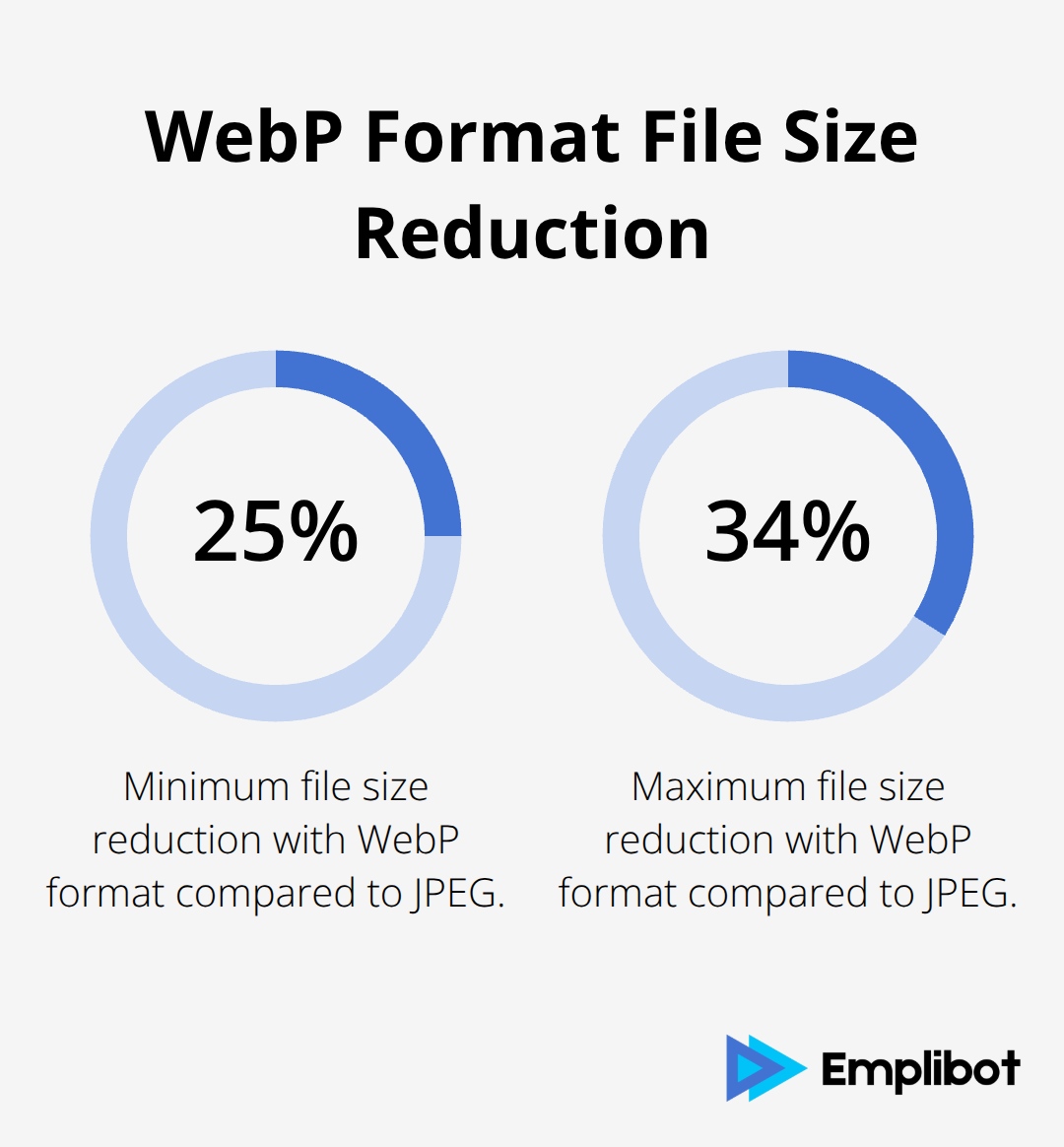

WebP reduces image file sizes by 25-34% compared to JPEG at equivalent visual quality. It preserves the detail AI systems require for accurate recognition. Microsoft's Image Analysis API processes WebP images 40% faster than traditional formats. Smaller files also load faster, and that matters because page load speed is a ranking factor. Set image dimensions to 1200×800 pixels for optimal processing. This 3:2 ratio works across desktop and mobile and gives AI algorithms enough detail for object recognition. Compress images at a quality setting of 85 to balance file size against the visual clarity that multimodal AI needs to identify key elements and relationships within your content.
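One way to apply the 1200×800 and quality-85 settings in bulk is Google's `cwebp` encoder from libwebp. The Python sketch below only builds the command line. It assumes you already know each source image's pixel dimensions and that `cwebp` is installed. The sketch center-crops to a 3:2 box first so that resizing to exactly 1200×800 does not stretch the image; `cwebp` applies `-crop` before any scaling. `build_cwebp_command` and `center_crop_box` are illustrative helpers and are not part of libwebp.

```python
TARGET_W, TARGET_H = 1200, 800  # 3:2 target size from the guideline above
QUALITY = 85                    # lossy quality setting from the guideline above


def center_crop_box(width, height, ratio_w=3, ratio_h=2):
    """Return (x, y, w, h) for the largest centered box with the given aspect ratio."""
    if width * ratio_h > height * ratio_w:
        # Source is wider than 3:2: keep the full height and trim the sides.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Source is taller than (or exactly) 3:2: keep the full width and trim top/bottom.
        crop_w = width
        crop_h = width * ratio_h // ratio_w
    return (width - crop_w) // 2, (height - crop_h) // 2, crop_w, crop_h


def build_cwebp_command(src, dst, width, height, quality=QUALITY):
    """Build a cwebp argument list: crop to 3:2, resize to 1200x800, encode at `quality`."""
    x, y, w, h = center_crop_box(width, height)
    return [
        "cwebp",
        "-q", str(quality),
        "-crop", str(x), str(y), str(w), str(h),
        "-resize", str(TARGET_W), str(TARGET_H),
        src,
        "-o", dst,
    ]
```

Passing the list to `subprocess.run(..., check=True)` would run the conversion. Sources smaller than 1200×800 get upscaled by this command, which adds no real detail, so you may want to skip those.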

Strategic ALT Text Targets Entity Recognition

Write ALT text that focuses on the primary entity AI systems should recognize rather than generic descriptions. Instead of "person cooking food," use "chef prepares pasta carbonara in a stainless steel pan on a gas stove" to target specific entities that match user search queries. Keep ALT text between 10 and 15 words to align with how AI mode processes semantic information (longer descriptions dilute entity recognition accuracy). Include one primary keyword that matches your content topic, but avoid keyword stuffing that triggers spam detection algorithms.
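A small linter makes it easier to enforce these ALT text rules across a content library. The Python sketch below checks three things: the 10-15 word range, the single-keyword rule, and a short list of generic openers. The function name and the opener list are assumptions for illustration. The checks are simple string heuristics and do not model how any search engine actually scores ALT text.

```python
# Illustrative list of generic openers; extend it to suit your own library.
GENERIC_OPENERS = ("image of", "picture of", "photo of")


def alt_text_problems(alt, primary_keyword, min_words=10, max_words=15):
    """Return a list of guideline violations; an empty list means the ALT text passes."""
    problems = []
    text = alt.strip().lower()

    word_count = len(text.split())
    if not min_words <= word_count <= max_words:
        problems.append(f"{word_count} words (target {min_words}-{max_words})")

    # Count keyword occurrences: zero misses the topic, more than one looks like stuffing.
    hits = text.count(primary_keyword.lower())
    if hits == 0:
        problems.append("primary keyword missing")
    elif hits > 1:
        problems.append("primary keyword repeated; possible keyword stuffing")

    if text.startswith(GENERIC_OPENERS):
        problems.append("generic opener; name the entity instead")
    return problems
```

Take "person cooking food" with the keyword "pasta carbonara". The linter reports both the 3-word count and the missing keyword. A 13-word description such as "chef prepares pasta carbonara in a stainless steel pan on a gas stove" passes cleanly.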

Captions Drive Contextual Understanding

Place concise captions directly below images that explain the process or outcome shown in the visual content. Keep captions to 15-25 words and connect the image to your article's main topic through specific action words like "demonstrates," "shows," or "illustrates." AI systems use caption text to understand image context within your broader content strategy, so write captions that bridge visual elements with your written instructions. This combination helps visual search algorithms categorize your content accurately and match it to relevant user queries that seek step-by-step guidance.
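In HTML, the standard way to keep a caption directly below its image is a `<figure>` element with a `<figcaption>`. This Python sketch renders that markup. It rejects any caption outside 15-25 words or any caption that lacks an action word. `render_figure`, `caption_problems`, and the three-word action list are hypothetical helpers based on the guidance above. Setting the `width` and `height` attributes lets the browser reserve space before the image loads.

```python
from html import escape

ACTION_WORDS = {"demonstrates", "shows", "illustrates"}  # action words named above


def caption_problems(caption, min_words=15, max_words=25):
    """Return caption guideline violations; an empty list means the caption passes."""
    words = [w.strip(".,;:!?").lower() for w in caption.split()]
    problems = []
    if not min_words <= len(words) <= max_words:
        problems.append(f"{len(words)} words (target {min_words}-{max_words})")
    if not ACTION_WORDS.intersection(words):
        problems.append("no action word such as 'shows' or 'demonstrates'")
    return problems


def render_figure(src, alt, caption, width=1200, height=800):
    """Render an image with its caption directly below it using <figure>/<figcaption>."""
    problems = caption_problems(caption)
    if problems:
        raise ValueError("; ".join(problems))
    # escape() converts &, <, > and quotes so attribute values stay well-formed.
    return (
        "<figure>\n"
        f'  <img src="{escape(src)}" alt="{escape(alt)}" width="{width}" height="{height}">\n'
        f"  <figcaption>{escape(caption)}</figcaption>\n"
        "</figure>"
    )
```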

These technical foundations prepare your images for AI recognition, but visual content needs strategic structure to rank effectively. The next step involves creating visual guides that AI systems can parse and users can follow seamlessly.

How Do You Structure Visuals for AI Recognition?

Step-by-Step Visual Documentation

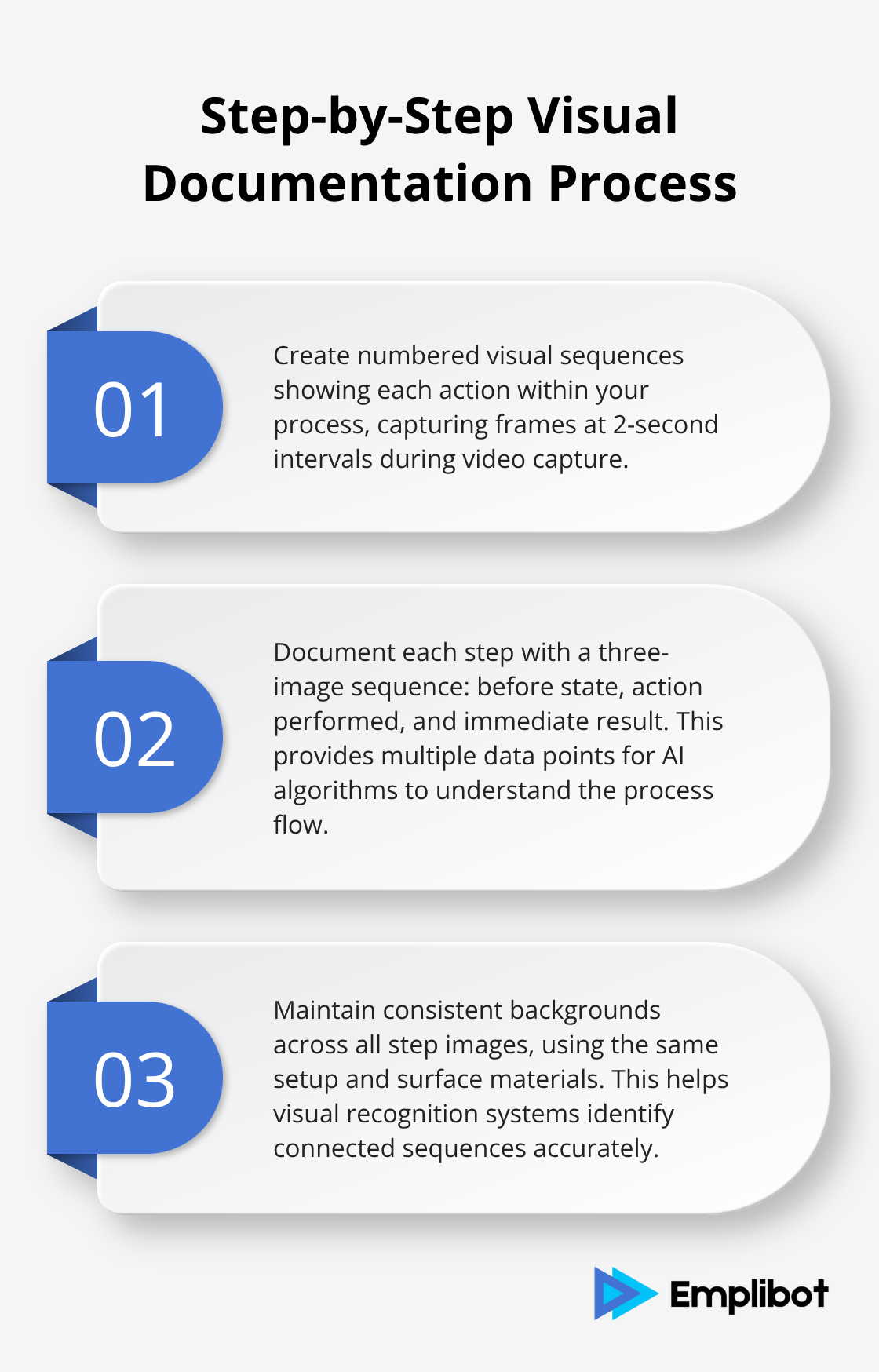

Create numbered visual sequences that show each action within your process rather than static outcome shots. Microsoft's Image Analysis API identifies sequential patterns in visual content when images follow logical progression steps. If you record the process on video, extract frames at 2-second intervals to capture clear hand positions, tool usage, and material changes. Position your camera at a 45-degree angle above the work surface to capture both the process and the person's hands. This angle gives AI systems the spatial context they need for accurate interpretation.
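If you capture the process on video, `ffmpeg`'s `fps` filter can pull out one frame every two seconds. The sketch below builds that command in Python. The function name and the output naming pattern are assumptions. Frames come out as lossless PNGs, so you can pick the clearest ones before converting them to WebP.

```python
def frame_extraction_command(video, out_prefix, interval_seconds=2):
    """Build an ffmpeg command that saves one frame every `interval_seconds` seconds."""
    return [
        "ffmpeg",
        "-i", video,
        # fps=1/N outputs one frame per N seconds of input video.
        "-vf", f"fps=1/{interval_seconds}",
        # Numbered output files: <prefix>_001.png, <prefix>_002.png, ...
        f"{out_prefix}_%03d.png",
    ]
```

You can run the command with `subprocess.run(frame_extraction_command("pasta.mp4", "pasta"), check=True)`. If a key moment falls between samples, lower `interval_seconds`.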

Document each step with images that show the before state, the action performed, and the immediate result. This three-image sequence per step gives visual search algorithms multiple data points to understand your process flow. Keep backgrounds consistent across all step images by using the same setup and surface materials, because visual recognition systems flag inconsistent environments as separate processes rather than connected sequences.
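A consistent naming convention makes it easier to audit that every step is a complete before/action/result triple. The convention below (`<slug>-step-<NN>-<phase>.<ext>`) is purely hypothetical. The sketch generates names in that pattern and reports which steps are missing a phase.

```python
import re
from collections import defaultdict

PHASES = ("before", "action", "result")

# Hypothetical convention: <slug>-step-<NN>-<phase>.<ext>, e.g. carbonara-step-01-before.webp
NAME_PATTERN = re.compile(
    r"^(?P<slug>[a-z0-9-]+)-step-(?P<step>\d{2})-(?P<phase>before|action|result)\.\w+$"
)


def step_filename(slug, step, phase, ext="webp"):
    """Build a filename in the hypothetical convention above."""
    if phase not in PHASES:
        raise ValueError(f"unknown phase: {phase}")
    return f"{slug}-step-{step:02d}-{phase}.{ext}"


def missing_phases(filenames):
    """Map each step that has at least one image to the phases it still lacks."""
    found = defaultdict(set)
    for name in filenames:
        match = NAME_PATTERN.match(name)
        if match:
            found[int(match["step"])].add(match["phase"])
    return {
        step: [phase for phase in PHASES if phase not in phases]
        for step, phases in sorted(found.items())
        if len(phases) < len(PHASES)
    }
```

Steps with no images at all do not appear in the report. Compare the step count against your written instructions as well.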

Before and After Framework Implementation

Structure your how-to content with clear before and after comparison images that occupy identical positions within your layout. Dynamic Yield’s Experience OS can algorithmically match content to customer preferences when images maintain consistent dimensions and positions. Capture before images that show the complete start condition, with all tools and materials visible in the frame. Take after images from the exact same camera position with identical conditions to help AI algorithms recognize the transformation your content demonstrates.
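A matching camera position is hard to verify from pixels, but matching dimensions are easy to check. This stdlib-only Python sketch assumes your before and after shots are PNG files, for example frames exported before WebP conversion. It reads width and height from the PNG `IHDR` chunk, which the PNG specification requires to come first, and compares them. The helper names are illustrative.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_dimensions(data):
    """Return (width, height) from the IHDR chunk that follows the 8-byte PNG signature."""
    # Layout: 8-byte signature, 4-byte chunk length, 4-byte chunk type "IHDR",
    # then width and height as big-endian unsigned 32-bit integers.
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG with a leading IHDR chunk")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def same_dimensions(before_path, after_path):
    """True when the before and after PNGs share identical pixel dimensions."""
    with open(before_path, "rb") as f:
        before = png_dimensions(f.read(24))
    with open(after_path, "rb") as f:
        after = png_dimensions(f.read(24))
    return before == after
```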

Insert transition images between your before and after shots that highlight the specific change that occurs. These intermediate visuals should capture the exact moment of transformation, such as paint being applied, ingredients being mixed, or components being assembled. AI mode visual search uses these transition points to understand causation relationships within your instructional content (which improves search results for queries that seek specific problem-solution guidance).

Visual Prompt Testing Strategy

Test your visual content by uploading images to Google's AI mode and checking which elements the system identifies correctly. Run searches with your step-by-step images as queries to verify that AI systems recognize the tools, materials, and actions you intended to highlight. Check that your before and after comparisons trigger relevant related searches. Generic results indicate poor visual clarity or missing context.
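You can make this check repeatable by keeping two lists for each image: the entities you meant it to show and the entities the AI mode results actually mention. Then compare the two. This Python sketch does not call any search service; it assumes you transcribe the observed entities by hand. `recognition_report` is a hypothetical helper.

```python
def recognition_report(intended, observed):
    """Compare entities you meant an image to show with entities noted from search results.

    Matching is case-insensitive exact string comparison, so "mixing bowl" and
    "bowl" count as different entities.
    """
    intended_set = {entity.strip().lower() for entity in intended}
    observed_set = {entity.strip().lower() for entity in observed}
    matched = intended_set & observed_set
    return {
        # Share of intended entities that the results picked up.
        "coverage": len(matched) / len(intended_set) if intended_set else 1.0,
        "missed": sorted(intended_set - observed_set),
        "unexpected": sorted(observed_set - intended_set),
    }
```

A high "missed" count for an image suggests that the entity is too small, obscured, or poorly lit in the frame.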

Validate every link referenced alongside your visual content by testing each URL on multiple devices and connection speeds. Links in image captions must load within 2 seconds to maintain the user engagement that supports search rankings. Replace broken or slow links immediately, because AI systems factor link reliability into the content quality assessments that determine visual search visibility.
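Python's standard library is enough to script a basic check for broken or slow links. This sketch times how long each URL takes to return response headers from a single machine. It approximates testing across devices and connection speeds but does not reproduce it. The 2-second budget comes from the guideline above, and the helper names are assumptions.

```python
import time
import urllib.error
import urllib.request

LOAD_BUDGET_SECONDS = 2.0  # threshold from the guideline above


def classify(status, elapsed, budget=LOAD_BUDGET_SECONDS):
    """Label a link 'broken', 'slow', or 'ok' from its HTTP status and response time."""
    if status is None or status >= 400:
        return "broken"
    if elapsed > budget:
        return "slow"
    return "ok"


def check_url(url, timeout=10.0):
    """Fetch `url` once and classify it; measures time until response headers arrive."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError; its .code holds the status.
        status = err.code
    except (OSError, ValueError):
        # Network failures and timeouts (OSError) or malformed URLs (ValueError).
        status = None
    elapsed = time.perf_counter() - start
    return classify(status, elapsed)
```

Run `check_url` over every URL in your captions and review anything not labeled "ok". Timing to response headers understates full page load time, so treat "ok" as necessary rather than sufficient.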

Final Thoughts

AI mode visual search optimization can transform your content performance when you use WebP images at 1200×800 pixels with strategic ALT text that targets key entities. Captions should connect visual elements to your content topics through specific action words and stay within 15-25 words. Step-by-step visual documentation with consistent backgrounds and before-and-after frameworks helps AI systems understand process flows and transformations.

These optimization strategies can deliver measurable content marketing ROI through greater visual search visibility and lower bounce rates. Lighter WebP images load and process faster, and clearer visual structure improves the engagement metrics that drive conversions. Start by auditing your existing visual content against these technical requirements to optimize for AI mode visual search effectively.

Test your images through AI mode searches to verify recognition accuracy and validate all referenced links for speed and reliability. Emplibot automates content creation and SEO optimization across WordPress and social media platforms while you focus on implementing these visual search strategies. Monitor your visual search performance through analytics that track image-based traffic sources and user interaction patterns with your step-by-step guides (this data reveals which visual elements drive the highest engagement rates).