Google’s latest spam policy updates target scaled content abuse, site reputation abuse, and expired domain abuse with unprecedented enforcement intensity. Publishers using automated content systems face heightened scrutiny from search algorithms.

We at Emplibot understand these challenges firsthand. Our approach focuses on creating high-quality content that meets Google’s value-based standards while protecting your site from potential penalties.

What Exactly Counts as Scaled Content Abuse

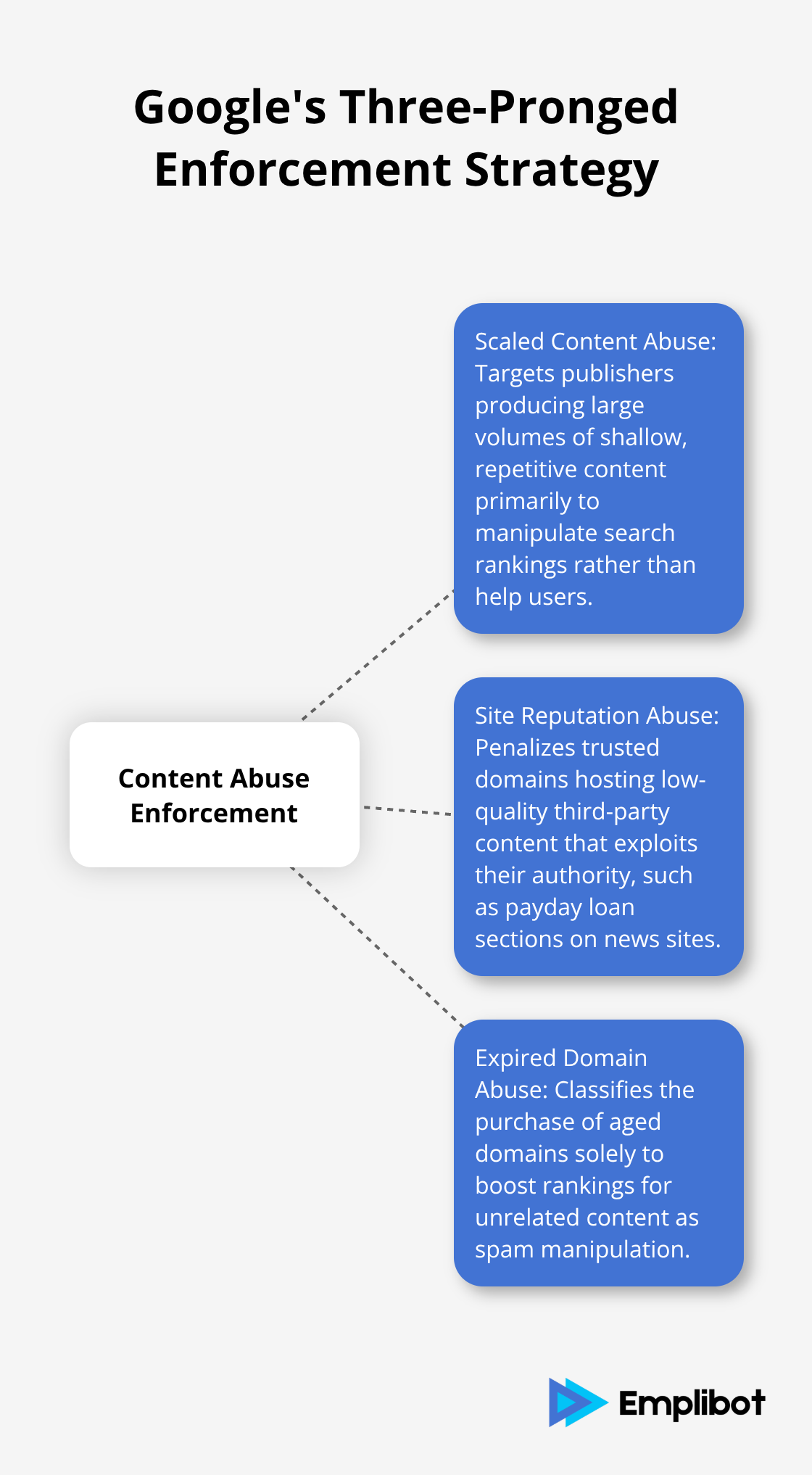

Google’s Three-Pronged Enforcement Strategy

Google’s March 2024 spam policy updates target three specific abuse patterns that automated content systems often create. Scaled content abuse occurs when publishers produce large volumes of content primarily to manipulate search rankings rather than help users. Google has implemented aggressive enforcement against sites that publish shallow, repetitive content at scale.

Site reputation abuse happens when trusted domains host low-quality third-party content that exploits their authority. Google now treats pages such as payday loan reviews on educational sites or third-party coupon pages on news sites as spam when the host provides little or no oversight. Expired domain abuse involves buying aged domains solely to boost rankings for unrelated content, which Google now classifies as spam manipulation.

Detection Methods and Algorithmic Changes

Google reported that, after its March 2024 update, search results contained 45% less low-quality, unoriginal content. Google does not disclose exactly how it identifies automated content farms. The patterns most often associated with them are rapid content velocity, thin topic coverage, and weak user engagement, commonly gauged through metrics such as bounce rate and time on page.

Publishers face de-indexation risks when their content shows clear automation signatures like repetitive structures, keyword stuffing, or lack of original insights. Google’s systems now analyze content depth, user satisfaction signals, and topical expertise to separate valuable content from spam.
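Google does not publish how its systems detect these automation signatures, so any in-house check is only an approximation. As a rough sketch, a publisher could screen its own drafts for repetitive, template-based structure with a common near-duplicate heuristic. The method breaks each draft into overlapping k-word "shingles" and compares every pair of drafts with Jaccard similarity. This is not Google's method. The shingle size `k`, the `threshold`, and the function names are assumptions to tune against your own content.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word windows) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Very short texts: treat the whole text as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity |A & B| / |A | B|; 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_repetitive(drafts: dict[str, str], threshold: float = 0.5,
                    k: int = 5) -> list[tuple[str, str, float]]:
    """Return (id_a, id_b, score) for draft pairs at or above the threshold."""
    sets = {doc_id: shingles(text, k) for doc_id, text in drafts.items()}
    flagged = []
    for a, b in combinations(sorted(sets), 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            flagged.append((a, b, round(score, 2)))
    return flagged


# Hypothetical drafts: two city pages generated from the same template.
example = {
    "plumber-austin": "Looking for the best plumber in Austin? Our guide covers prices, reviews and tips.",
    "plumber-dallas": "Looking for the best plumber in Dallas? Our guide covers prices, reviews and tips.",
    "tax-guide": "How to file quarterly estimated taxes as a freelancer in 2024.",
}
print(flag_repetitive(example, threshold=0.5, k=3))
# -> [('plumber-austin', 'plumber-dallas', 0.6)]
```

The two city pages differ only in one word, so they share 9 of 15 distinct 3-word shingles. A 0.5 threshold flags them for a rewrite before publication.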

Enforcement Timeline and Risk Factors

Google staggered enforcement. The scaled content abuse and expired domain abuse policies took effect with the March 5, 2024 update. The site reputation abuse policy received a two-month grace period that ended May 5, 2024. Sites that generate hundreds of articles monthly without unique value propositions face the highest scrutiny. Publishers who create smaller volumes of substantive content remain largely unaffected by these algorithmic changes.

High-risk indicators include rapid publication schedules, template-based content structures, and minimal editorial oversight. Google doesn’t care how content is created, whether by humans or AI, as long as it provides genuine value to users. Emplibot produces high-quality content in smaller quantities with unique data points and original insights. That positions it to avoid these spam penalties while maintaining search visibility.

How to Build Spam-Proof Automated Content Systems

Original Data Integration Prevents Algorithmic Penalties

Smart publishers protect themselves by embedding unique data points into every automated article. Google’s algorithms specifically reward content that includes original research, proprietary statistics, or exclusive case studies that users cannot find elsewhere. Publishers who source data from industry reports, conduct their own surveys, or analyze customer behavior patterns create content that passes Google’s value assessment tests.

Websites that publish original data see 67% higher organic click-through rates compared to sites that use recycled information (according to BrightEdge research). The key lies in making each URL genuinely unique through exclusive insights, custom graphics, or first-hand examples that competitors cannot replicate.
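One way to make "genuinely unique" checkable is to have editors tag the data points each article uses, such as a survey figure, a customer metric, or a case-study result. A check can then flag any URL whose data points all appear elsewhere on the site. The sketch below assumes those tags already exist as normalized strings. The tagging scheme, function name, and URLs are hypothetical.

```python
from collections import Counter


def urls_without_unique_data(data_points: dict[str, set[str]]) -> list[str]:
    """Return URLs that contain no data point unique to them across the site.

    data_points maps each URL to the set of (normalized) data points it cites.
    """
    # Count how many URLs use each data point (each URL counts once per point).
    usage = Counter(point for points in data_points.values() for point in points)
    # A URL passes only if at least one of its data points appears nowhere else.
    return sorted(
        url for url, points in data_points.items()
        if not any(usage[point] == 1 for point in points)
    )


# Hypothetical site inventory of tagged data points.
site = {
    "/blog/churn-benchmarks": {"own customer churn survey", "industry avg churn"},
    "/blog/reduce-churn": {"industry avg churn"},
    "/blog/onboarding-case-study": {"acme onboarding results"},
}
print(urls_without_unique_data(site))  # ['/blog/reduce-churn']
```

Articles with no tagged data points at all are flagged too, since they cannot offer anything exclusive.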

Technical Safeguards Against Content Duplication

Publishers must implement aggressive de-duplication systems that prevent similar content from competing against itself in search results. Canonical tags should point to the strongest version of similar content while 301 redirects eliminate duplicate URLs entirely.
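Deciding which URL is "strongest" requires some metric. The sketch below assumes organic clicks exported from your analytics, stored in a hypothetical `clicks` field. For one cluster of near-duplicate pages, it plans 301 redirects for pages you retire and `rel="canonical"` tags for pages you keep live. The `Page` structure and `plan_consolidation` function are illustrative names. The redirects themselves would still be configured in your CMS or web server; this code only produces the plan.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    clicks: int       # hypothetical strength metric, e.g. organic clicks from analytics
    keep_live: bool   # True: keep the page and canonicalize it; False: retire it with a 301


def plan_consolidation(cluster: list[Page]) -> tuple[str, dict[str, str], dict[str, str]]:
    """Pick the strongest URL in a near-duplicate cluster and plan redirects and canonicals."""
    if not cluster:
        raise ValueError("cluster must contain at least one page")
    strongest = max(cluster, key=lambda page: page.clicks)
    tag = f'<link rel="canonical" href="{strongest.url}">'
    redirects: dict[str, str] = {}
    canonical_tags: dict[str, str] = {}
    for page in cluster:
        if page.url == strongest.url or page.keep_live:
            # Pages that stay live all point at the strongest URL
            # (the strongest page gets a self-referencing canonical).
            canonical_tags[page.url] = tag
        else:
            # Retired pages are 301-redirected to the strongest URL.
            redirects[page.url] = strongest.url
    return strongest.url, redirects, canonical_tags


cluster = [
    Page("https://example.com/seo-tips", clicks=120, keep_live=True),
    Page("https://example.com/seo-tips-2024", clicks=900, keep_live=True),
    Page("https://example.com/seo-tips-old", clicks=15, keep_live=False),
]
winner, redirects, tags = plan_consolidation(cluster)
print(winner)     # https://example.com/seo-tips-2024
print(redirects)  # {'https://example.com/seo-tips-old': 'https://example.com/seo-tips-2024'}
```

Google treats `rel="canonical"` as a hint rather than a directive. When a duplicate page no longer needs to exist, a 301 redirect is the stronger consolidation signal.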

Topic clustering strategies group related content under pillar pages with strategic internal links that reinforce topical authority. Publishers who organize content into clear hierarchies, with supporting articles that link to comprehensive guides, see better search performance than sites with a scattered content architecture.
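A simple way to keep a cluster's hierarchy intact is to audit the internal-link graph. Every supporting article should link up to its pillar, and the pillar should link down to each supporting article. The sketch assumes the link graph comes from a site crawl, stored as a mapping of each URL to the internal URLs it links to. The two-way rule is one common convention rather than a Google requirement, and the function name and URLs are hypothetical.

```python
def audit_cluster(pillar: str, supporting: list[str],
                  links: dict[str, set[str]]) -> dict[str, list[str]]:
    """Find missing links between a pillar page and its supporting articles.

    links maps each URL to the set of internal URLs it links to (e.g. from a crawl).
    """
    pillar_links = links.get(pillar, set())
    return {
        # Supporting articles that never link up to the pillar.
        "no_link_to_pillar": [u for u in supporting if pillar not in links.get(u, set())],
        # Supporting articles the pillar never links down to.
        "pillar_missing_link_to": [u for u in supporting if u not in pillar_links],
    }


links = {
    "/guides/email-marketing": {"/blog/subject-lines", "/blog/send-times"},
    "/blog/subject-lines": {"/guides/email-marketing"},
    "/blog/send-times": {"/blog/subject-lines"},
    "/blog/list-hygiene": set(),
}
report = audit_cluster(
    "/guides/email-marketing",
    ["/blog/subject-lines", "/blog/send-times", "/blog/list-hygiene"],
    links,
)
print(report)
# -> {'no_link_to_pillar': ['/blog/send-times', '/blog/list-hygiene'],
#     'pillar_missing_link_to': ['/blog/list-hygiene']}
```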

Quality Control Measures for Automated Systems

These technical controls prevent the thin content patterns that trigger Google’s scaled abuse detection while building stronger topical relevance signals. Publishers need strict editorial guidelines that define minimum word counts, required data sources, and mandatory unique elements for each piece of content.

Automated systems work best when they follow predefined quality thresholds and reject content that falls below specific standards. This approach keeps the efficiency benefits of automation while satisfying the value-based content requirements that determine visibility in Google Search.
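The editorial guidelines and quality thresholds described above can be expressed as a small gate that runs before anything is published. The sketch below is illustrative only. The `Draft` and `QualityPolicy` structures and every threshold value are hypothetical placeholders for your own standards. Word count serves only as a completeness floor; Google has stated it has no preferred word count.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    title: str
    body: str
    sources: list[str] = field(default_factory=list)          # citations for data used
    unique_elements: list[str] = field(default_factory=list)  # e.g. original chart, case study


@dataclass
class QualityPolicy:
    min_words: int = 1200           # hypothetical threshold; set your own
    min_sources: int = 2            # hypothetical
    min_unique_elements: int = 1    # hypothetical


def review(draft: Draft, policy: QualityPolicy) -> list[str]:
    """Return the reasons a draft fails the policy (empty list means it passes)."""
    problems = []
    word_count = len(draft.body.split())
    if word_count < policy.min_words:
        problems.append(f"too short: {word_count} words < {policy.min_words}")
    if len(draft.sources) < policy.min_sources:
        problems.append(f"needs {policy.min_sources} data sources, has {len(draft.sources)}")
    if len(draft.unique_elements) < policy.min_unique_elements:
        problems.append("missing a unique element (original data, case study, custom graphic)")
    return problems


def gate(drafts: list[Draft], policy: QualityPolicy) -> tuple[list[Draft], dict[str, list[str]]]:
    """Split drafts into publishable ones and rejected ones with reasons."""
    approved: list[Draft] = []
    rejected: dict[str, list[str]] = {}
    for draft in drafts:
        issues = review(draft, policy)
        if issues:
            rejected[draft.title] = issues
        else:
            approved.append(draft)
    return approved, rejected


policy = QualityPolicy()
drafts = [
    Draft("Churn benchmarks", "word " * 1300,
          ["internal customer survey", "industry report"], ["original chart"]),
    Draft("Thin listicle", "word " * 300),
]
approved, rejected = gate(drafts, policy)
print([d.title for d in approved])   # ['Churn benchmarks']
print(rejected["Thin listicle"][0])  # too short: 300 words < 1200
```

Rejected drafts come back with every failed rule rather than just the first. An editor can then fix them in one pass instead of resubmitting repeatedly.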

What Quality Standards Actually Matter to Google

Publishers who focus on smaller quantities of high-quality content consistently outperform mass content generators in Google’s current algorithmic environment. Search Console data from sites that reduced publication frequency by 60% while increasing content depth shows 34% greater average position improvements than high-volume publishers achieved. Google’s algorithms specifically reward sites that demonstrate topical expertise through comprehensive coverage rather than surface-level treatment across hundreds of topics.

Production Volume Versus Content Depth

The most successful automated content strategies limit publication to 10-15 pieces monthly while incorporating substantial research, original data analysis, and expert insights into each article. Sites that publish 200+ articles monthly attract more algorithmic scrutiny regardless of individual article quality, because high publishing velocity is one of the patterns associated with scaled content abuse. Publishers should prioritize content that answers complex user questions with actionable solutions rather than chasing keyword volume through thin content.
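A publication cap is easy to enforce mechanically. The sketch below fills each calendar month with at most `monthly_cap` approved articles. The default of 12 is an assumption that sits inside the 10-15 range above. The sketch also spreads each month's articles evenly across its days rather than publishing them in a burst. Function names and slugs are hypothetical.

```python
import calendar
from datetime import date


def next_month(year: int, month: int) -> tuple[int, int]:
    """Return the (year, month) that follows the given one."""
    return (year + 1, 1) if month == 12 else (year, month + 1)


def plan_months(queue: list[str], year: int, month: int,
                monthly_cap: int = 12) -> dict[tuple[int, int], list[str]]:
    """Assign queued article slugs to months, never exceeding monthly_cap per month."""
    if monthly_cap < 1:
        raise ValueError("monthly_cap must be at least 1")
    plan: dict[tuple[int, int], list[str]] = {}
    current = (year, month)
    for slug in queue:
        if len(plan.setdefault(current, [])) >= monthly_cap:
            current = next_month(*current)  # this month is full; roll over
            plan[current] = []
        plan[current].append(slug)
    return plan


def spread_dates(year: int, month: int, slugs: list[str]) -> dict[str, date]:
    """Spread one month's articles evenly across its days instead of in a burst."""
    if not slugs:
        return {}
    days_in_month = calendar.monthrange(year, month)[1]
    step = days_in_month / len(slugs)
    return {slug: date(year, month, 1 + int(i * step)) for i, slug in enumerate(slugs)}


queue = [f"article-{n}" for n in range(1, 31)]  # 30 approved drafts
plan = plan_months(queue, 2024, 11)
# Prints "2024 11 12", then "2024 12 12", then "2025 1 6".
for (y, m), slugs in plan.items():
    print(y, m, len(slugs))

november = spread_dates(2024, 11, plan[(2024, 11)])
print(november["article-2"])  # 2024-11-03
```

Overflow simply rolls into the next month. A backlog of approved drafts therefore never turns into a publishing spike.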

Google’s Method-Agnostic Content Evaluation

Google evaluates content based on user value rather than creation method. AI-generated articles therefore receive the same treatment as human-written content when they meet quality thresholds. Google’s quality raters assess content for experience, expertise, authoritativeness, and trustworthiness (E-E-A-T) without considering whether humans or machines produced the material. Content that includes original research results, industry-specific case studies, and practical implementation guidance passes Google’s quality assessments regardless of its production method.

Value-Based Assessment Criteria

Google’s algorithms prioritize content that solves real user problems over content that targets search rankings alone. Articles that provide actionable insights, original data points, and comprehensive solutions to specific challenges consistently outrank keyword-stuffed alternatives, whatever their word count or publication frequency. Publishers who create content with genuine utility see sustained organic traffic growth, while sites that focus on volume experience ranking volatility and potential penalties.

Final Thoughts

Google’s spam policy evolution represents a fundamental shift toward judging content by its value rather than by how it was produced. Publishers who understand these changes can protect their sites from scaled content abuse penalties by implementing strategic quality controls and focusing on genuine user value. The protective measures outlined here work because they address Google’s core concerns about automated content systems.

Original data integration, technical de-duplication safeguards, and topic clustering strategies create content that satisfies both algorithmic requirements and user needs. Publishers who maintain smaller publication volumes while maximizing content depth consistently outperform high-volume competitors in search rankings. Future-proofing your content strategy requires embracing Google’s method-neutral approach to quality assessment. Whether content comes from human writers or automated systems matters less than its ability to solve real user problems.

Emplibot handles everything from keyword research to SEO optimization, producing high-quality, engaging content that meets the value standards Google rewards. Publishers who prioritize substance over volume will thrive in this evolving landscape. Smart content strategies focus on delivering genuine value rather than chasing algorithmic shortcuts.